Cataract Surgery

With 19 million cataract surgeries performed annually, cataract surgery is the most common surgical procedure in the world [1]. A cataract is a clouding of the lens inside the eye, which leads to a decrease in vision. The purpose of the surgery is to remove this lens and replace it with an artificial lens. The entire procedure can be performed through small incisions only: the natural lens is broken into small pieces with a high-frequency ultrasound device before being removed from the eye, and the artificial lens is rolled up inside an injector before being inserted into the eye. The surgery typically lasts 15 minutes. During the surgery, the patient’s eye is under a microscope and the output of the microscope is video-recorded.

[1] Trikha S, Turnbull AM, Morris RJ, Anderson DF, Hossain P. The journey to femtosecond laser-assisted cataract surgery: new beginnings or false dawn? Eye. 2013; 27:461–473.

Video Collection

A dataset of 100 videos from 50 cataract surgeries has been collected. Surgeries were performed at Brest University Hospital between January 22, 2015 and September 10, 2015. Reasons for surgery included age-related cataract, traumatic cataract and refractive errors. Patients were 61 years old on average (minimum: 23, maximum: 83, standard deviation: 10). There were 38 females and 12 males. Informed consent was obtained from all patients. Surgeries were performed by three surgeons: a renowned expert (48 surgeries), a surgeon with one year of experience (1 surgery) and an intern (1 surgery). Surgeries were performed under an OPMI Lumera T microscope (Carl Zeiss Meditec, Jena, Germany). Two videos were recorded for each surgery: one recording tool-tissue interactions through the surgical microscope, the other recording the surgical tray (see Fig. 1). In total, more than nine hours of surgery (i.e. 18 hours of video) have been recorded.

Fig. 1. (1) surgical microscope (© copyright Zeiss); (2) surgical tray with the articulated arm.

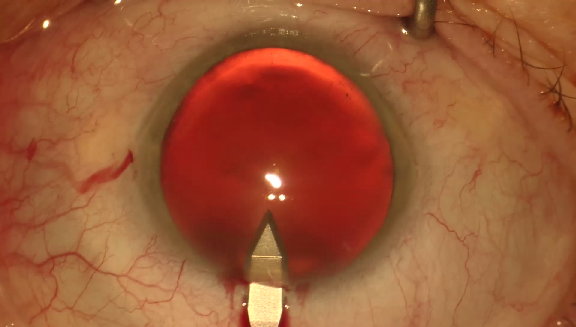

Surgical Microscope Videos

The first set of videos was recorded with a 180I camera (Toshiba, Tokyo, Japan) and a MediCap USB200 recorder (MediCapture, Plymouth Meeting, USA). The frame resolution was 1920x1080 pixels and the frame rate was approximately 30 frames per second.

Surgical Tray Videos

The second set of videos was recorded with an HDR-PJ5301 video camera (Sony, Tokyo, Japan) attached to the surgical tray by an articulated arm. The frame resolution was 1920x1080 pixels and the frame rate was 50 frames per second.

Video Synchronization

The two video recordings were started manually, by pressing two different buttons. Therefore, pairs of videos from the same surgery had to be synchronized retrospectively (see Fig. 2). Synchronization was based on tool usage analysis: videos were synchronized in such a way that no tool appears both on the tray and under the microscope at the same time (see Fig. 3). Tray videos were resampled to match the frame rate of the microscope videos. When necessary, empty frames (black frames) were added to microscope or tray videos to ensure that each frame in the microscope video is associated with exactly one frame in the tray video.
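
The resampling and padding steps can be illustrated with a small sketch. It is not the procedure actually used to build the dataset: it assumes integer frame rates (exactly 30 and 50 frames per second), picks the tray frame by flooring the resampled timestamp, and takes the time shift between the two recordings as a known input (`tray_offset`, a hypothetical parameter expressed in microscope-rate frames), whereas the text above derives it from tool usage analysis.

```python
def synchronize(n_micro, n_tray, tray_offset, micro_fps=30, tray_fps=50):
    """Pair every output frame with a microscope frame and a tray frame.

    tray_offset is a hypothetical input: the number of microscope-rate
    frames by which the tray recording starts after the microscope
    recording (negative if it starts before).
    None stands for an empty (black) frame added as padding.
    """
    # Length of the tray video once resampled to the microscope frame rate.
    n_tray_resampled = (n_tray * micro_fps) // tray_fps

    # Common timeline covering both recordings, in microscope-rate frames.
    start = min(0, tray_offset)
    end = max(n_micro, tray_offset + n_tray_resampled)

    pairs = []
    for k in range(start, end):
        micro = k if 0 <= k < n_micro else None
        r = k - tray_offset  # position in the resampled tray video
        if 0 <= r < n_tray_resampled:
            # Assumed resampling rule: floor of the matching tray timestamp.
            tray = (r * tray_fps) // micro_fps
        else:
            tray = None
        pairs.append((micro, tray))
    return pairs


print(synchronize(3, 5, 0))  # [(0, 0), (1, 1), (2, 3)]
```

With a positive offset, the first microscope frames are paired with black tray frames, and any tray frames recorded after the microscope stopped are paired with black microscope frames, so both padded videos end up with the same length.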

Figure panels: (1) surgical microscope video; (2) surgical tray video.

Video Format

Because of inconsistencies among video decoders, we decided to convert each video into one directory containing one JPEG image per frame.
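
As an illustration, the frames of one video could be listed in temporal order as sketched below. The sketch assumes that image files carry a `.jpg` or `.jpeg` extension and that each file name (without extension) is an integer frame index; `list_frames` is a hypothetical helper, not part of the dataset.

```python
from pathlib import Path


def list_frames(video_dir):
    """Return the JPEG frames of one video directory, sorted by frame index.

    Assumption: file names without extension are integers, so sorting is
    numeric ("2.jpg" comes before "10.jpg"), not alphabetical.
    """
    frames = [
        p for p in Path(video_dir).iterdir()
        if p.suffix.lower() in {".jpg", ".jpeg"}
    ]
    return sorted(frames, key=lambda p: int(p.stem))
```

Sorting numerically matters because a plain alphabetical sort would place frame 10 before frame 2 when file names are not zero-padded.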

Reference Standard

Expert Annotations

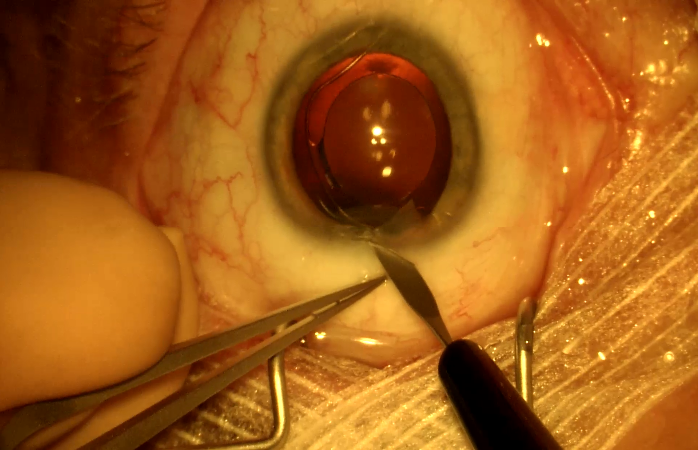

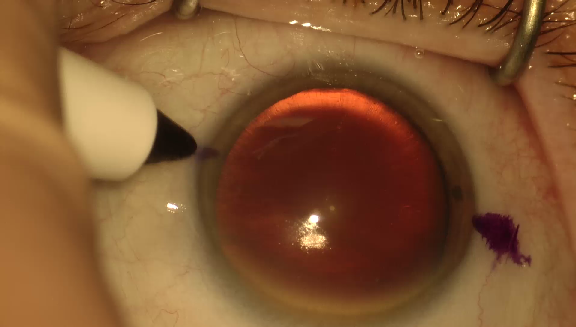

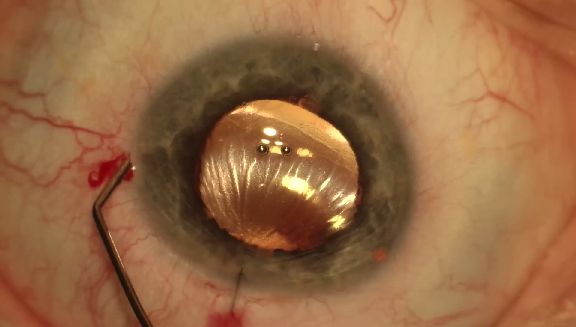

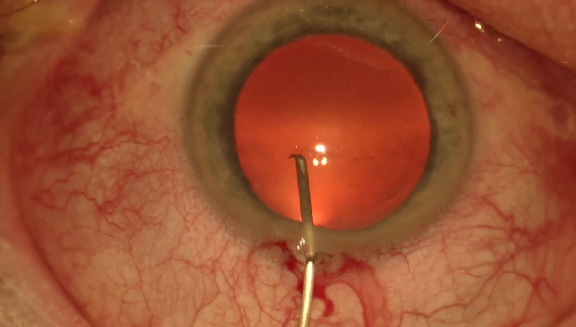

All surgical tools visible in microscope videos were first listed and labeled by the surgeons: a list of 21 surgical tools was obtained (see Fig. 4).

1. biomarker

2. Charleux cannula

3. hydrodissection cannula

4. Rycroft cannula

5. viscoelastic cannula

6. cotton

7. capsulorhexis cystotome

8. Bonn forceps

9. capsulorhexis forceps

10. Troutman forceps

11. needle holder

12. irrigation / aspiration handpiece

13. phacoemulsifier handpiece

14. vitrectomy handpiece

15. implant injector

16. primary incision knife

17. secondary incision knife

18. micromanipulator

19. suture needle

20. Mendez ring

21. Vannas scissors

Annotations were then collected for these 21 tools in each video. Annotators were two non-M.D. cataract surgery experts trained by an experienced ophthalmologist to recognize each surgical tool. They were instructed to indicate (through a web interface) when:

- a surgical tool starts or stops touching the eyeball in the microscope video,

- a surgical tool enters or exits the field of view in the tray video.

Frame-level labels were then determined automatically for each annotator. These labels are binary for microscope videos: 1 means that the tool is in contact with the eyeball, 0 means that it is not. They can be any non-negative integer in tray videos: they indicate the number of visible instances of this tool on the tray.
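
The conversion from annotated events to frame-level labels can be sketched as follows. The event formats are assumptions made for illustration: contact is given as half-open frame intervals `(start, stop)`, and tray events as `(frame, +1)` for a tool entering the field of view and `(frame, -1)` for a tool leaving it. The actual export format of the web interface is not described here.

```python
def contact_labels(n_frames, intervals):
    """Binary labels for one tool in a microscope video.

    intervals: assumed list of (start, stop) frame pairs, stop excluded,
    during which the tool touches the eyeball.
    """
    labels = [0] * n_frames
    for start, stop in intervals:
        for frame in range(max(start, 0), min(stop, n_frames)):
            labels[frame] = 1
    return labels


def tray_counts(n_frames, events, initial_count=0):
    """Per-frame instance counts for one tool in a tray video.

    events: assumed list of (frame, delta) pairs, delta = +1 when an
    instance enters the field of view and -1 when it exits.
    """
    change = [0] * n_frames
    for frame, delta in events:
        if 0 <= frame < n_frames:
            change[frame] += delta

    counts, current = [], initial_count
    for frame in range(n_frames):
        current += change[frame]
        counts.append(current)
    return counts


print(contact_labels(6, [(1, 3)]))                       # [0, 1, 1, 0, 0, 0]
print(tray_counts(5, [(1, -1), (3, +1)], initial_count=2))  # [2, 1, 1, 2, 2]
```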

Adjudication

Next, annotations from both experts were adjudicated. Disagreements on the list of tools in contact with the eyeball, or on the list of tools visible on the surgical tray, were automatically detected. In case of disagreement, experts watched the video together and jointly determined the actual contact or visibility status. However, the precise contact or visibility timing was not adjudicated. Therefore, a real-valued reference standard was obtained. For microscope videos:

- 0 means that both experts agree that the tool is not in contact,

- 1 means that both experts agree that the tool is in contact,

- 0.5 means that experts disagree.

For tray videos, the average tool instance count is provided.
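
Both cases are consistent with averaging the two experts' frame-level labels, which the minimal sketch below assumes: for binary microscope labels the mean is 0, 0.5 or 1, and for tray labels it is the average instance count. `reference_standard` is a hypothetical helper name.

```python
def reference_standard(labels_a, labels_b):
    """Frame-wise mean of the two experts' labels for one tool."""
    if len(labels_a) != len(labels_b):
        raise ValueError("both experts must label the same frames")
    return [(a + b) / 2 for a, b in zip(labels_a, labels_b)]


# Microscope video: experts disagree on the third frame only.
print(reference_standard([0, 1, 1, 0], [0, 1, 0, 0]))  # [0.0, 1.0, 0.5, 0.0]
# Tray video: experts count 1 and 2 instances on the second frame.
print(reference_standard([2, 1], [2, 2]))              # [2.0, 1.5]
```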

Inter-Observer Variability

Some statistics about the annotated videos are included below. In the microscope videos, inter-rater agreement about tool contact is measured using Cohen’s Kappa. In the tray videos, inter-rater agreement about the number of visible instances of each tool is measured using linear-weighted Cohen’s Kappa.
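
For reference, both agreement measures can be computed as sketched below. This is a generic implementation of Cohen's Kappa written in its disagreement form, not the code used to produce the figures reported here. For the linear-weighted variant, the sketch assumes that the disagreement weight between two instance counts is their absolute difference. When both raters use a single identical label everywhere, Kappa is undefined; returning 1.0 in that case is a convention chosen for this sketch.

```python
from collections import Counter


def cohen_kappa(rater_a, rater_b, linear_weights=False):
    """Cohen's Kappa between two raters labeling the same frames.

    With linear_weights=True, labels must be numeric and the disagreement
    between labels a and b is |a - b| (assumed weighting).
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("both raters must label the same non-empty set of frames")

    n = len(rater_a)
    categories = set(rater_a) | set(rater_b)
    if len(categories) == 1:
        return 1.0  # convention: Kappa is undefined here

    def disagreement(a, b):
        if linear_weights:
            return abs(a - b)
        return 0 if a == b else 1

    joint = Counter(zip(rater_a, rater_b))  # observed label pairs
    marg_a = Counter(rater_a)               # label frequencies, rater A
    marg_b = Counter(rater_b)               # label frequencies, rater B

    observed = sum(disagreement(a, b) * c for (a, b), c in joint.items()) / n
    expected = sum(
        disagreement(a, b) * marg_a[a] * marg_b[b]
        for a in categories
        for b in categories
    ) / (n * n)
    return 1.0 - observed / expected
```

Linear weighting penalizes a miscount of two instances twice as much as a miscount of one, which suits tray labels; for binary contact labels the weighted and unweighted forms coincide.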

Kappa in Microscope Videos

- Before adjudication: k = 0.894 +/- 0.096

- After adjudication: k = 0.909 +/- 0.098

Linear-weighted Kappa in Tray Videos

- Before adjudication: k = 0.752 +/- 0.257

- After adjudication: k = 0.988 +/- 0.039

We can see that the adjudication task was more complex in the tray videos: due to the large number of tools, the annotators often lost track of a few of them.

Annotation Files

Each video is associated with one CSV file containing one row per frame in addition to a header. Because videos were converted into directories of JPEG images, each row is in fact associated with one JPEG image. The first column contains the frame index (i.e. the name of the JPEG image without the extension). The second column contains a binary value indicating whether or not this frame is an empty frame added for synchronization purposes. The remaining 21 columns contain the real-valued reference standard for each surgical tool.
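
A minimal reading sketch based on this layout is given below. It relies on column positions only, since the header names are not specified here; the comma delimiter, the encoding of the binary flag as a number, and the column names in the sample (`frame`, `padding`, `tool_a`, `tool_b`) are assumptions made for illustration.

```python
import csv
import io


def read_annotations(lines):
    """Parse one annotation file given as an open file or iterable of lines.

    Column 0: frame index (JPEG name without extension).
    Column 1: flag for empty frames added for synchronization.
    Remaining columns: real-valued reference standard, one per tool.
    """
    reader = csv.reader(lines)  # assumed delimiter: comma
    header = next(reader)
    tool_names = header[2:]

    frames = []
    for record in reader:
        if not record:  # skip blank lines
            continue
        frames.append({
            "frame": record[0],
            "is_padding": float(record[1]) != 0.0,
            "tools": {name: float(v) for name, v in zip(tool_names, record[2:])},
        })
    return frames


# Hypothetical two-tool sample; real files contain 21 tool columns.
sample = io.StringIO("frame,padding,tool_a,tool_b\n1,0,0,0.5\n2,1,0,0\n")
frames = read_annotations(sample)
print(frames[0]["tools"])  # {'tool_a': 0.0, 'tool_b': 0.5}
```

For a file on disk, the same function can be called as `read_annotations(open(path, newline=""))`. Frames flagged as padding would typically be skipped when training or evaluating.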

Training/Test Set Separation

The dataset was divided into a training set (25 pairs of synchronized videos) and a test set (25 pairs of synchronized videos). Division was made in such a way that 1) each tool appears in the same number of videos from both subsets (plus or minus one) and 2) the test set only contains videos from surgeries performed by the renowned expert. Apart from that, division was made at random.